January 20, 2020

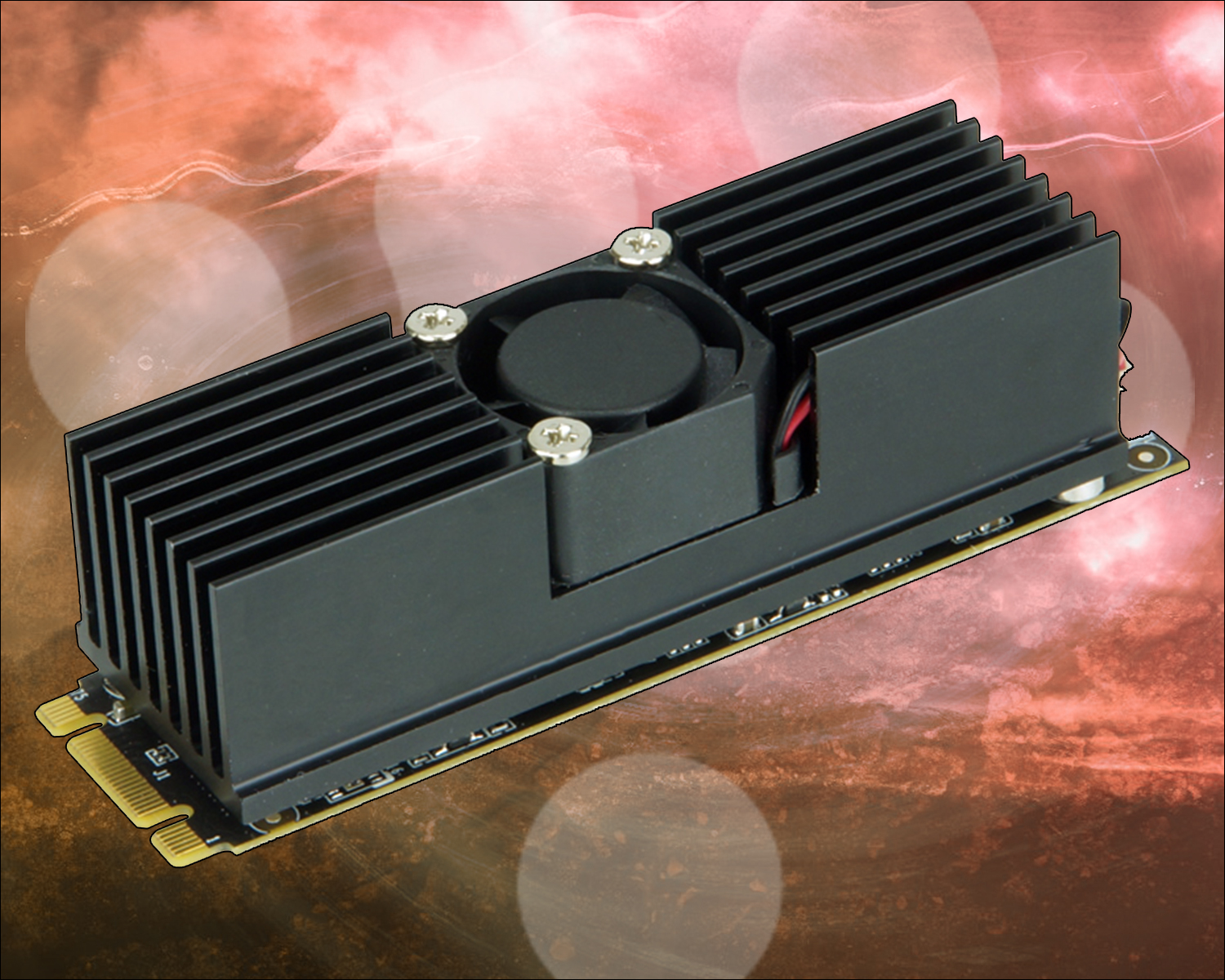

ICP Australia introduces iEi’s Mustang-M2BM-MX2, a deep learning inference accelerator card in the M.2 BM Key form factor (22 mm x 80 mm) with 2 x Intel® Movidius™ Myriad™ X MA2485 VPUs.

The Mustang-M2BM-MX2 is a deep learning convolutional neural network acceleration card that speeds up AI inference in a flexible and scalable way. Equipped with two Intel® Movidius™ Myriad™ X Vision Processing Units (VPUs), the Mustang-M2BM-MX2 M.2 card can be added to an existing system, enabling high-performance computing without costing a fortune.

VPUs can run AI inference faster and are well suited to low-power applications such as surveillance, retail and transportation. With its power efficiency and high performance on dedicated DNN topologies, the card is a good fit for AI edge computing devices, where it reduces total power usage and provides longer duty time for rechargeable edge computing equipment.

The Open Visual Inference & Neural Network Optimization (OpenVINO™) toolkit is based on convolutional neural networks (CNN). It extends workloads across Intel® hardware and maximizes performance. The toolkit can optimize pre-trained deep learning models from frameworks such as Caffe, MXNet and TensorFlow into IR (Intermediate Representation) binary files, then run the inference engine heterogeneously across Intel® hardware such as CPU, GPU, Intel® Movidius™ Myriad™ X VPU and FPGA.
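As a rough illustration of that workflow, the sketch below loads an already converted IR model onto a Myriad X VPU with CPU fallback. It is a minimal sketch, not iEi's or Intel's reference code. It assumes an OpenVINO release that ships the `openvino.inference_engine.IECore` Python API and the `MYRIAD` device plugin. The helper names `hetero_device` and `load_on_device` and the file name `model.xml` are hypothetical, chosen only for this example.

```python
from pathlib import Path


def hetero_device(*devices: str) -> str:
    """Build an OpenVINO device string (hypothetical helper).

    A single name is returned unchanged; several names are joined using the
    HETERO plugin syntax, listed in priority order.
    """
    if not devices:
        raise ValueError("at least one device name is required")
    if len(devices) == 1:
        return devices[0]
    return "HETERO:" + ",".join(devices)


def load_on_device(xml_path: str, device: str):
    """Load an IR model (.xml topology plus .bin weights) onto a device."""
    # Imported lazily so hetero_device() works without OpenVINO installed.
    # Assumption: a release that provides the IECore Python API.
    from openvino.inference_engine import IECore

    ie = IECore()
    # Assumption: the weights file sits next to the .xml with a .bin suffix.
    bin_path = str(Path(xml_path).with_suffix(".bin"))
    net = ie.read_network(model=xml_path, weights=bin_path)
    return ie.load_network(network=net, device_name=device)


if __name__ == "__main__":
    # Prefer the Myriad X VPU and fall back to the CPU for unsupported layers.
    device = hetero_device("MYRIAD", "CPU")
    print(device)
    # With OpenVINO installed and a converted model available, one could call:
    # exec_net = load_on_device("model.xml", device)
```

The device string is kept separate from the loading step so that the same model can be retargeted to a CPU, GPU or VPU by changing one argument.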

KEY FEATURES:

- M.2 BM Key Form Factor (22 x 80 mm)

- 2 x Intel® Movidius™ Myriad™ X VPU MA2485

- Power Efficient, Approximately 7.5 W

- Operating Temperature -20°C to 60°C

- Powered by Intel’s OpenVINO™ Toolkit